S3, EBS and EFS: which storage is best?

Introduction

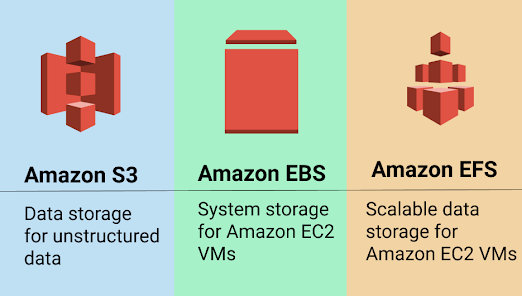

Amazon S3 stands for Simple Storage Service, EBS stands for Elastic Block Store, and EFS stands for Elastic File System. To determine which storage is best, we need to understand the features each service offers and their benefits. This blog covers the topics below.

- S3 Bucket

- S3 Bucket Folder

- S3 Object

- S3 Versioning

- S3 storage class

- S3 Bucket Policy and S3 Object ACL

- S3 Availability, Durability and Data Replication

- Elastic Block Store

- EBS Features

- Elastic File System

- Which storage is best: S3, EBS or EFS?

S3 Bucket

Amazon S3 (Simple Storage Service) is a cloud-based object storage service offered by Amazon Web Services (AWS). An S3 bucket is a container used to store objects (files, images, videos, etc.) in S3. Buckets are a fundamental concept in S3 and are used to organize and manage objects in the service.

Some key features of S3 buckets include:

- Scalability - S3 buckets are designed to scale to meet the needs of any application. You can store any number of objects in a bucket, and S3 can handle virtually unlimited storage capacity.

- Durability - S3 is designed for 99.999999999% (11 nines) durability, meaning that it is highly unlikely that you will ever lose an object stored in S3.

- Security - S3 provides several security features, including encryption in transit and at rest, access control, and compliance certifications.

- Flexibility - S3 provides a range of storage classes that allow you to optimize the cost and performance of storing your data, as well as features like lifecycle policies that enable you to automatically transition objects to lower-cost storage tiers or delete them when they are no longer needed.

- Integration with other AWS services - S3 integrates with many other AWS services, such as AWS Lambda, Amazon Glacier, and Amazon CloudFront, enabling you to build powerful and scalable applications.

S3 buckets are a versatile and powerful feature of AWS, providing a scalable, durable, and secure way to store and manage objects in the cloud.

S3 Bucket Folder

While S3 is an object-based storage service, it allows you to use a key-based hierarchy to organize your objects, which is similar to a file system's folder structure. In S3, folders are actually object key prefixes that allow you to group related objects together, making it easier to organize, manage and locate them.

When you create a new object in S3, you give it an object key, which is a single string that is unique within the bucket. By convention, the part of the key up to the last delimiter acts as the prefix and the rest is the object name. For example, if you want to store an image file in a folder called "images" within an S3 bucket called "my-bucket," you would create an object whose key starts with the prefix "images/" followed by the object name, such as "images/photo.jpg".

While S3 does not actually create a physical folder structure, the S3 management console and other AWS tools allow you to display the objects in a hierarchical manner based on their key prefixes, making it easier to navigate and manage your objects. You can also use S3's API or SDKs to programmatically interact with the objects in your bucket and use the key prefixes to group and organize your objects in a folder-like structure.

While S3 does not have a physical folder structure, it allows you to use key-based prefixes to create a logical hierarchy for your objects, providing a flexible and scalable way to organize and manage your data in the cloud.
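To make the "folders are just prefixes" idea concrete, here is a minimal local sketch in Python. It does not call AWS; it only imitates how a listing with a prefix and a delimiter groups a flat set of keys into objects and sub-folders. The function name `list_folder` and the sample keys are made up for illustration.

```python
def list_folder(keys, prefix="", delimiter="/"):
    """Mimic a delimiter-based listing over a flat collection of object keys.

    Returns (objects, folders): the keys directly under `prefix`, and the
    distinct "sub-folder" prefixes one level below it.
    """
    objects, folders = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # The remainder still contains the delimiter, so the key is
            # grouped under a sub-folder prefix instead of listed directly.
            folders.add(prefix + head + delimiter)
        else:
            objects.append(key)
    return objects, sorted(folders)


# Hypothetical keys in a bucket; there are no real folders here,
# only strings that happen to contain "/".
keys = [
    "readme.txt",
    "images/cat.jpg",
    "images/dog.jpg",
    "images/2024/bird.jpg",
    "logs/app.log",
]

top_objects, top_folders = list_folder(keys)
image_objects, image_folders = list_folder(keys, prefix="images/")
print(top_objects, top_folders)
print(image_objects, image_folders)
```

At the top level only "readme.txt" is listed as an object, while "images/" and "logs/" show up as folders. This is the same grouping the S3 console presents when you browse a bucket.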

S3 Object

In Amazon S3, an object is a fundamental unit of data that you store in a bucket. An object can be any type of data, such as a text file, an image, a video, or a database backup. Each object is identified by a key that is unique within its bucket. The key is a single string, and it is commonly read as a prefix, a delimiter, and the object name.

The S3 object key has the following components:

- Prefix - The prefix is a string that comes before the object name and is used to group related objects together. For example, if you have a set of images, you might use a prefix of "images/" to group them together.

- Delimiter - The delimiter is a character that you use to separate the prefix from the object name. The S3 console treats the forward slash (/) as the delimiter, and list requests made through the API let you choose which delimiter to group keys by.

- Object name - The object name is the name of the file or data that you are storing in the bucket.

An S3 object can be up to 5 terabytes in size and can be accessed over the internet using a unique URL provided by S3, as long as the caller has permission to read it. S3 objects are stored redundantly across multiple devices and facilities to ensure durability and high availability. You can also control access to your objects using S3's built-in access control features, such as bucket policies, access control lists (ACLs), and IAM policies.

S3 objects are the basic building blocks of the S3 service, providing a scalable and durable way to store and manage any type of data in the cloud.
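As a small illustration of how a bucket name and an object key combine into a URL, the sketch below builds a virtual-hosted-style address. The bucket, Region, and key are hypothetical, and the resulting URL only works if the object is publicly readable or the request is signed.

```python
from urllib.parse import quote


def object_url(bucket, region, key):
    """Build a virtual-hosted-style URL for an object (names are illustrative)."""
    # Percent-encode the key but keep "/" so the prefix structure stays readable.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


url = object_url("my-bucket", "us-east-1", "images/cat photo.jpg")
print(url)
```

Note that the space in the key is percent-encoded, while the "/" delimiter is left alone.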

S3 Versioning

Amazon S3 Versioning is a feature that allows you to keep multiple versions of an object in the same bucket. When you enable versioning on a bucket, Amazon S3 automatically assigns a unique version ID to each new version of an object that you upload, and stores all versions of the object. You can also upload a new version of an object with the same key and S3 will automatically assign a new version ID.

Versioning can be useful for a variety of reasons, such as:

- Backup and recovery - With versioning enabled, you can recover previous versions of an object in case it is accidentally deleted or overwritten.

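- Change history - Versioning keeps every version of an object along with its last-modified time, so you can see how the object changed over time. Versioning alone does not record who made a change; for that, you can enable AWS CloudTrail data events or S3 server access logging.

- Retention policies - Versioning is a prerequisite for S3 Object Lock, which lets you enforce retention periods during which object versions cannot be deleted or overwritten.

Versioning is enabled at the bucket level, so it applies to every object written to the bucket after it is turned on. Objects that already existed keep a null version ID until they are next overwritten. Once enabled, versioning cannot be turned off for the bucket, only suspended. You can also use S3 Lifecycle rules to automatically transition older versions of objects to lower-cost storage classes or delete them when they are no longer needed.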

It's important to note that enabling versioning on a bucket can have implications for data storage costs, as well as access control and permissions management. Therefore, it's important to carefully consider the use cases for versioning and understand how it interacts with other S3 features and services.
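The behavior described above can be sketched with a toy in-memory model. This is not the S3 API; the class `VersionedBucket`, its methods, and the simple "v1, v2, ..." version IDs are assumptions for illustration (real S3 version IDs are opaque strings). It shows that an overwrite adds a version and that a plain delete only adds a delete marker.

```python
import itertools


class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket (not the S3 API)."""

    def __init__(self):
        self._versions = {}              # key -> list of (version_id, body or None)
        self._ids = itertools.count(1)

    def put(self, key, body):
        version_id = f"v{next(self._ids)}"   # real S3 IDs are opaque strings
        self._versions.setdefault(key, []).append((version_id, body))
        return version_id

    def delete(self, key):
        # A plain delete only adds a delete marker; older versions remain.
        return self.put(key, None)

    def get(self, key, version_id=None):
        versions = self._versions.get(key, [])
        if version_id is None:
            if not versions or versions[-1][1] is None:
                raise KeyError(key)      # missing, or latest is a delete marker
            return versions[-1][1]
        for vid, body in versions:
            if vid == version_id and body is not None:
                return body
        raise KeyError((key, version_id))


bucket = VersionedBucket()
first = bucket.put("report.txt", "draft")
second = bucket.put("report.txt", "final")
bucket.delete("report.txt")

# The latest version is now a delete marker, but older versions are recoverable.
recovered = bucket.get("report.txt", version_id=first)
print(recovered)
```

Every version stays stored until it is explicitly removed, which is also why versioning increases storage costs.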

S3 storage class

Amazon S3 storage classes are a set of different storage tiers designed to help you optimize your data storage costs and access patterns based on your specific use case. Each storage class provides a different combination of performance, durability, availability, and cost.

The following are the different S3 storage classes:

- S3 Standard - This is the default storage class and provides high durability, availability, and performance for frequently accessed data.

- S3 Intelligent-Tiering - This storage class monitors access patterns and automatically moves objects that have not been accessed for a while to lower-cost access tiers, then moves them back to the frequent access tier when they are read again. In exchange for a small per-object monitoring fee, it provides fast access to objects when needed and cost savings when access patterns change.

- S3 Standard-Infrequent Access (S3 Standard-IA) - This storage class is designed for data that is accessed less frequently, but still requires low latency access. It provides a lower storage cost compared to S3 Standard, but has a retrieval fee for accessing data.

- S3 One Zone-Infrequent Access (S3 One Zone-IA) - This storage class is similar to S3 Standard-IA but is stored in a single Availability Zone instead of multiple zones, providing lower storage costs but lower availability and no protection against the loss of that zone.

- S3 Glacier - The S3 Glacier storage classes are designed for data archiving and long-term backup. They provide low storage costs, and retrieval time depends on the class: S3 Glacier Instant Retrieval returns data in milliseconds, while S3 Glacier Flexible Retrieval takes minutes to hours.

- S3 Glacier Deep Archive - This storage class is similar to S3 Glacier, but is designed for long-term retention of data that is rarely, if ever, accessed. It provides the lowest storage cost but the longest retrieval time, on the order of hours.

You can use lifecycle policies to transition objects between storage classes based on their age, optionally limited to objects that match a prefix, tag, or size filter. This allows you to optimize your storage costs over time without manual intervention. If you want objects to move based on how often they are accessed, S3 Intelligent-Tiering is the class designed for that.
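A lifecycle policy is easier to picture as data. The sketch below writes one rule as a Python dictionary in the shape that, to my understanding, boto3's `put_bucket_lifecycle_configuration` accepts; the rule ID, prefix, and day counts are hypothetical. The helper `storage_class_at` is purely local and only approximates the outcome, since S3 applies lifecycle actions asynchronously.

```python
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-old-logs",            # hypothetical rule name
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},       # hypothetical prefix
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}


def storage_class_at(age_days, rule):
    """Return the class an object of this age would be in under `rule`,
    or None once the rule has expired (deleted) it."""
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


rule = lifecycle_configuration["Rules"][0]
for age in (10, 45, 200, 400):
    print(age, storage_class_at(age, rule))
```

Under this rule an object under "logs/" starts in S3 Standard, moves to Standard-IA after 30 days, moves to Glacier after 90 days, and is deleted after a year.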

S3 Bucket Policy and S3 Object ACL

Amazon S3 provides two primary mechanisms for controlling access to S3 resources: bucket policies and object access control lists (ACLs).

S3 Bucket Policy - A bucket policy is a JSON-based policy document that defines the permissions for the entire S3 bucket. Bucket policies allow you to specify which users or accounts can perform specific actions on the bucket or its contents, such as read, write, or delete operations. Bucket policies are a powerful and flexible way to control access to your S3 resources, but they can be complex to manage and should be thoroughly tested before implementation.

S3 Object ACL - An object access control list (ACL) is a set of grants attached to a specific S3 object. Each grant gives an AWS account or a predefined group a permission, such as reading the object or reading and writing its ACL. ACLs are coarse-grained and difficult to manage at scale, and AWS recommends keeping them disabled for most use cases and relying on policies instead.

It's important to note that both bucket policies and object ACLs can be used together to provide fine-grained access control for your S3 resources. Additionally, Amazon S3 integrates with AWS Identity and Access Management (IAM) to provide centralized access control management for your AWS resources, including S3 buckets and objects. By using IAM, you can create and manage users, groups, and roles, and assign granular permissions to control access to S3 resources.

S3 bucket policies and object ACLs provide complementary mechanisms for controlling access to S3 resources, and the appropriate use of each will depend on the specific requirements of your use case.
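Since a bucket policy is a JSON document, a short example may help. The sketch below builds one in Python and serializes it. The bucket name, account ID, and role name are hypothetical; the statement allows a single IAM role to read objects under the "images/" prefix and nothing else.

```python
import json

BUCKET = "my-bucket"                                          # hypothetical bucket
READER_ROLE = "arn:aws:iam::111122223333:role/ImageReader"    # hypothetical role

bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowRoleToReadImages",
            "Effect": "Allow",
            "Principal": {"AWS": READER_ROLE},
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/images/*",
        }
    ],
}

# The serialized string is what you would attach to the bucket.
policy_document = json.dumps(bucket_policy, indent=2)
print(policy_document)
```

Keeping the policy as a dictionary until the last step makes it easy to review and test before it is applied.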

S3 Availability, Durability and Data Replication

Amazon S3 provides high availability, durability, and data replication to keep your data available and protected.

Availability - Amazon S3 is designed for high availability. Most storage classes store your data across at least three Availability Zones within a Region, so your data remains reachable even if a data center experiences an outage or interruption.

Durability - Amazon S3 is designed for high durability by storing your data redundantly across multiple devices and, for most storage classes, multiple Availability Zones. This protects your data against hardware failures, data corruption, and other types of data loss.

Data Replication - Amazon S3 provides options for replicating objects to another bucket for even greater availability and durability. You can use Cross-Region Replication to copy objects to a bucket in a different Region, or Same-Region Replication to copy objects to another bucket in the same Region. Replication requires versioning to be enabled on both the source and destination buckets. Additionally, Amazon S3 supports multiple storage classes with different durability and availability characteristics, allowing you to choose the level of protection that best meets your needs.

Amazon S3 provides a highly available, durable, and scalable storage solution that is designed to meet the needs of a wide range of applications and use cases. By leveraging Amazon S3's built-in replication and availability features, you can ensure that your data is always available and protected against data loss.
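To get a feel for what the 99.999999999% durability design target mentioned earlier means in practice, here is a rough back-of-the-envelope calculation. It treats the figure as an annual per-object loss probability of 1e-11, which is a simplification for illustration and not a guarantee.

```python
ANNUAL_LOSS_PROBABILITY = 1e-11   # 1 - 0.99999999999, per object per year


def expected_annual_losses(object_count):
    """Average number of objects lost per year at the 11-nines design target."""
    return object_count * ANNUAL_LOSS_PROBABILITY


def years_per_lost_object(object_count):
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(object_count)


objects = 10_000_000
print(expected_annual_losses(objects))
print(round(years_per_lost_object(objects)))
```

For ten million stored objects, this works out to an average of one lost object roughly every 10,000 years.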

Elastic Block Store

Amazon Elastic Block Store (EBS) is a block-level storage service that provides persistent storage for Amazon EC2 instances. It allows you to create and attach persistent block storage volumes to your EC2 instances, similar to a physical hard drive.

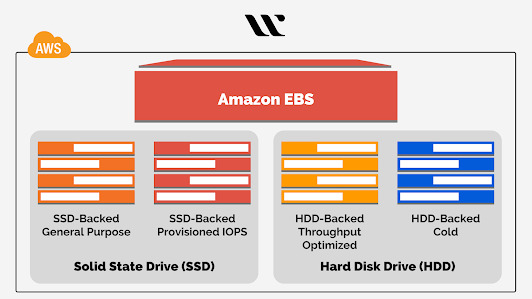

EBS volumes come in different types to optimize performance and cost for different workloads. The available types include:

- General Purpose SSD (gp2, and the newer gp3) - This is the default choice and provides balanced performance and cost for most workloads.

- Provisioned IOPS SSD (io1, and the newer io2) - This volume type is designed for high-performance workloads that require low latency and high I/O operations per second (IOPS).

- Throughput Optimized HDD (st1) - This volume type is designed for large, sequential workloads that require high throughput at low cost.

- Cold HDD (sc1) - This volume type is designed for infrequently accessed data where the lowest storage cost matters most.

EBS volumes can be attached to and detached from EC2 instances in the same Availability Zone on demand, allowing you to easily add and remove storage as needed. You can also take snapshots of EBS volumes, which create a point-in-time backup of the volume that can be used to create new volumes or restore existing volumes.

Each volume type has its own performance characteristics and pricing model. Choosing the right volume type for your workload can help optimize performance and cost. EBS also offers Elastic Volumes, which allow you to adjust the size, performance, and volume type of your volumes without having to manually migrate data or take your instances offline.

Amazon EBS provides a reliable, durable, and scalable storage solution for Amazon EC2 instances, allowing you to easily add and remove storage as needed and optimize performance and cost for different workloads.
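As one example of how a volume type's performance characteristics work, the sketch below estimates gp2 performance from volume size. The figures (3 IOPS per GiB, a 100 IOPS floor, a 16,000 IOPS ceiling, bursts to 3,000 IOPS, and a 5.4 million credit bucket) are the ones I recall from the AWS documentation, so treat them as assumptions and check the current docs before relying on them.

```python
def gp2_baseline_iops(size_gib):
    """Baseline IOPS for a gp2 volume: 3 IOPS per GiB, clamped to 100..16,000."""
    return max(100, min(16_000, 3 * size_gib))


def gp2_burst_seconds(size_gib, credits=5_400_000, burst_iops=3_000):
    """Seconds a full credit bucket can sustain burst_iops, or None if the
    baseline already meets or exceeds the burst rate."""
    baseline = gp2_baseline_iops(size_gib)
    if baseline >= burst_iops:
        return None
    return credits / (burst_iops - baseline)


for size in (10, 100, 500, 6_000):
    print(size, gp2_baseline_iops(size), gp2_burst_seconds(size))
```

The takeaway is that on gp2, small volumes depend heavily on burst credits, while large volumes get their performance from size alone.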

EBS Features

Amazon Elastic Block Store (EBS) provides a number of features to help you manage and optimize your block storage volumes:

- Volume types - EBS offers multiple volume types to optimize performance and cost for different workloads, including General Purpose SSD, Provisioned IOPS SSD, Throughput Optimized HDD, and Cold HDD.

- Snapshots - EBS allows you to take point-in-time snapshots of your volumes, which can be used to create new volumes or restore existing volumes. Snapshots are incremental, meaning that only the changes since the last snapshot are saved, which helps to minimize storage costs.

- Encryption - EBS volumes can be encrypted at rest using AWS Key Management Service (KMS), providing an additional layer of security for your data.

- Elastic Volumes - EBS Elastic Volumes allow you to easily adjust the size, performance, and volume type of your volumes without having to manually migrate data or take your instances offline.

- Lifecycle policies - Amazon Data Lifecycle Manager lets you define policies that automate the creation and deletion of snapshots based on predefined rules, which helps to optimize storage costs and simplify snapshot management.

- Multi-Attach - EBS Multi-Attach allows you to attach a single Provisioned IOPS SSD volume to multiple instances in the same Availability Zone simultaneously, which can be useful for clustered applications that coordinate their own concurrent writes.

- Bursting - Some volume types, such as gp2, st1, and sc1, accumulate credits while idle and spend them to temporarily exceed their baseline performance when your applications need more I/O.

EBS provides a wide range of features and capabilities to help you optimize your block storage volumes for performance, cost, and reliability. By leveraging these features, you can ensure that your applications have the storage resources they need to operate effectively and efficiently.
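The idea behind incremental snapshots can be shown with a toy model. This is not how EBS is implemented internally; it is only a sketch that treats a volume as a dictionary of numbered blocks and stores the blocks that differ from the previous snapshot. The function name and block contents are made up.

```python
def take_snapshot(volume_blocks, previous_blocks=None):
    """Return only the blocks that changed since the previous snapshot.

    `volume_blocks` and `previous_blocks` map block number -> content.
    With no previous snapshot, every block is stored (a full first snapshot).
    """
    previous_blocks = previous_blocks or {}
    return {
        block: data
        for block, data in volume_blocks.items()
        if previous_blocks.get(block) != data
    }


volume = {0: "boot", 1: "data-a", 2: "data-b"}
snap1 = take_snapshot(volume)                  # first snapshot stores all blocks

after_write = {**volume, 1: "data-a2"}         # one block is overwritten
snap2 = take_snapshot(after_write, volume)     # second snapshot stores the change

print(len(snap1), len(snap2))
```

The second snapshot holds a single block, which is why frequent snapshots of a slowly changing volume cost far less than repeated full copies.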

Elastic File System

What is a file system?

A file system is a method for storing and organizing computer files and directories on a storage device, such as a hard disk drive, solid-state drive, or network-attached storage (NAS).

A file system provides a way for users and applications to access and manage files on a storage device. It defines the structure of files and directories, how they are stored and organized, and how they can be accessed and modified.

Some common file systems include NTFS (used in Windows), APFS and the older HFS+ (used in macOS), and ext4 (used in many Linux distributions). File systems can also be used alongside cloud storage services like Amazon S3, which stores objects rather than files in a directory tree.

In addition to organizing files and directories, file systems also provide features such as permissions, encryption, compression, and journaling. They may also have different performance characteristics, such as read and write speeds, depending on the specific implementation and hardware used.

What is EFS?

Amazon Elastic File System (EFS) is a cloud-based file storage service provided by Amazon Web Services (AWS). EFS provides a scalable, managed file system that can be accessed by multiple instances running within a VPC (Virtual Private Cloud). EFS uses the Network File System (NFS) protocol, which allows you to mount file systems on Linux and Unix instances, and makes it easy to share files across instances and users.

Some key features of Amazon EFS include:

- Scalability - EFS grows and shrinks automatically as you add and remove files, so you do not need to provision storage capacity in advance.

- High availability - EFS is designed for high availability and durability, with multiple copies of your data stored across multiple Availability Zones within a region.

- Performance - EFS provides low-latency performance with high throughput. It supports a wide range of access patterns, from small I/O requests to large, streaming I/O requests.

- Security - EFS provides multiple layers of security, including network isolation, encryption at rest and in transit, and access controls based on AWS Identity and Access Management (IAM).

- Integration with other AWS services - EFS integrates with other AWS services, such as Amazon EC2 and AWS Lambda, making it easy to store and access data across your entire application stack.

Amazon EFS provides a highly scalable, highly available, and highly performant file storage solution that is well-suited for a wide range of use cases, including web serving, content management, and big data processing.
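Because EFS speaks NFS, mounting it on a Linux instance comes down to a standard mount command. The sketch below only assembles that command in Python and does not run it. The file system ID, Region, and mount point are hypothetical, and the mount options are the ones I understand AWS to recommend for NFSv4.1.

```python
def efs_mount_command(file_system_id, region, mount_point):
    """Build the argv for mounting an EFS file system over NFSv4.1."""
    dns_name = f"{file_system_id}.efs.{region}.amazonaws.com"
    options = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"
    return ["sudo", "mount", "-t", "nfs4", "-o", options, f"{dns_name}:/", mount_point]


# Hypothetical file system ID, Region, and mount point.
command = efs_mount_command("fs-0123456789abcdef0", "us-east-1", "/mnt/efs")
print(" ".join(command))
```

Running the same command on several instances in the VPC gives each of them a shared view of the same files.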

Which storage is best: S3, EBS or EFS?

There is no "one size fits all" answer to which storage service is best among Amazon S3, EBS, and EFS, as it depends on your specific use case and requirements. Each service has its own strengths and use cases, and you should evaluate them based on your needs.

Here are some general characteristics and use cases for each service:

Amazon S3 - Amazon Simple Storage Service (S3) is a highly scalable and durable object storage service that is designed for storing and retrieving large amounts of unstructured data. S3 is suitable for a wide range of use cases, such as backups, archives, content distribution, media storage, and big data analytics. S3 is also a good choice if you need to access your data from anywhere over the internet or if you need to store data for long-term retention.

Amazon EBS - Amazon Elastic Block Store (EBS) is a block-level storage service that is designed for use with Amazon EC2 instances. EBS volumes can be attached to EC2 instances and used as primary storage for data that requires low-latency access. EBS is a good choice if you need to run applications that require high IOPS or low latency, such as databases or transactional workloads.

Amazon EFS - Amazon Elastic File System (EFS) is a scalable and fully managed file storage service that is designed for use with Amazon EC2 instances. EFS provides shared access to files and can be used for a wide range of use cases, such as content management, web serving, and data analytics. EFS is a good choice if you need a file system that can be accessed from multiple EC2 instances or if you need to store and share data across multiple applications.
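The comparison above can be boiled down to a rough rule of thumb. The function below is only a simplification of the points made in this section, with made-up parameter names; real decisions should also weigh cost, performance, and durability requirements.

```python
def suggest_storage(needs_mounted_file_system, shared_by_many_instances):
    """Rule-of-thumb mapping from two requirements to a storage service."""
    if not needs_mounted_file_system:
        # Data reached through an API: backups, media, archives, analytics.
        return "S3"
    # A mounted file system: shared across instances, or private to one.
    return "EFS" if shared_by_many_instances else "EBS"


print(suggest_storage(True, False))    # database volume on a single instance
print(suggest_storage(True, True))     # content directory shared by web servers
print(suggest_storage(False, True))    # backups and media served over the internet
```

In practice many applications use all three together, for example EBS for the database, EFS for shared content, and S3 for backups.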

Conclusion

Ultimately, the best storage service for your use case will depend on factors such as the type of data you need to store, the performance requirements of your applications, and the level of accessibility and durability that you need.

P.S. If you read this till the end, thank you!

Follow me for cloud and AWS content. I'll be back with another interesting topic about AWS.

If you have questions, you can reach me on LinkedIn: Gnanaprakasam Venkatesan.

This article is part of the AWS Career Growth Program (AWS-CGP) by Pravin Mishra.

For more AWS-related content, please visit the website.
